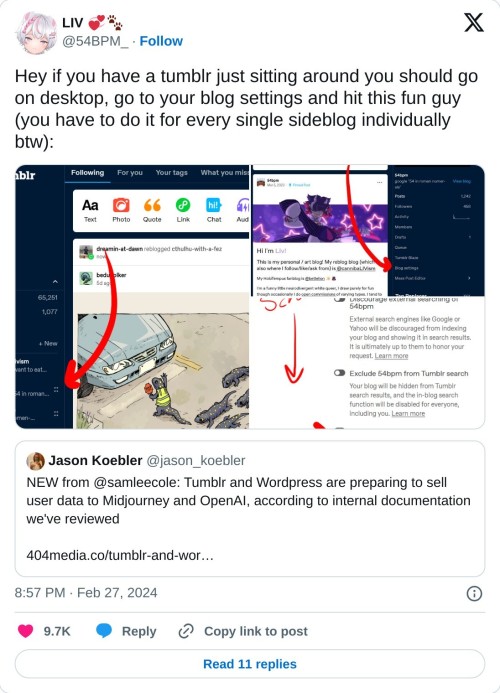

A Twitter Thread On How To Protect Your Art From Tumblr's Agreement To Sell It Off To Artificial Intelligence

A Twitter thread on how to protect your art from Tumblr's agreement to sell it off to artificial intelligence data-gathering pools. Please protect yourselves!

The replies include how to do it depending on your platform.

More Posts from Toadmushroom

The conversation around AI is going to get away from us quickly because people lack the language to distinguish types of AI, and it's not their fault. Companies love to slap "AI" on anything a computer program does that they believe can pass for "intelligent". That muddies the waters: the exact same word covers a wide umbrella, and people who want to talk about AI often don't know how to describe the distinctions within it.

I'm a software engineer and not a data scientist, so I'm not exactly at the level of domain expert. But I work with data scientists, and I have at least rudimentary college-level knowledge of machine learning and linear algebra from my CS degree. So I want to give some quick guidance.

What is AI? And what is not AI?

So what's the difference between just a computer program, and an "AI" program? Computers can do a lot of smart things, and companies love the idea of calling anything that seems smart enough "AI", but industry-wise the question of "how smart" a program is has nothing to do with whether it is AI.

A regular, non-AI computer program is procedural and rigidly defined. I could "program" traffic light behavior that essentially goes `if (light === green) { go(); } else { stop(); }`. I've told it in simple and rigid terms what condition to check, and how to behave based on that check. (A better program would have a lot more to check for, like signs and road conditions and pedestrians in the street, and those things would still need to be spelled out.)
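To make the "rigidly defined" point concrete, here is a minimal runnable sketch of that kind of program. The `decide` function and the `light` / `pedestrianInRoad` fields are hypothetical names made up for this illustration; the point is only that every condition is written out by hand.

```javascript
// Rule-based (non-AI) driving decision: every condition is spelled out by hand.
// The function name and scene fields are hypothetical, chosen for illustration.
function decide(scene) {
  if (scene.pedestrianInRoad) {
    return "stop"; // explicit rule: never drive at a pedestrian
  }
  if (scene.light === "green") {
    return "go";
  }
  return "stop"; // red, yellow, or anything unrecognized
}

console.log(decide({ light: "green", pedestrianInRoad: false }));
console.log(decide({ light: "green", pedestrianInRoad: true }));
console.log(decide({ light: "red", pedestrianInRoad: false }));
```

If this program gets something wrong, you can read the rules and see exactly which line made the call.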

An AI traffic light behavior is generated by machine learning, which, simplistically, is a huge cranking machine of linear algebra that you feed training data into and that "learns" from it. By "learning" I mean it develops a complex and opaque model of parameters that fits the training data (without over-fitting it). In this case the training data probably includes thousands of videos of car behavior at traffic intersections. Through parameter tweaking and model adjustment, data scientists turn this crank over and over until it produces a model that will guess the right behavioral output for future scenarios it hasn't seen.

A well-trained model would be fed a green light and know to go, and a red light and know to stop, and 'green but there's a kid in the road' and know to stop. A very very well-trained model can probably do this better than my program above, because it has the capacity to be more adaptive than my rigidly-defined thing if the rigidly-defined program is missing some considerations. But if the AI model makes a wrong choice, it is significantly harder to trace down why exactly it did that.

Because again, the reason it's making this decision may be very opaque. It's like engineering a very specific plinko machine which gets tweaked to be very good at taking a road input and giving the right output. But imagine that plinko machine contains millions of pegs, and none of them necessarily corresponds to anything to do with the road. There's possibly no "if green, go, else stop" to look for. (Maybe there is, for the traffic light specifically, as that is intentionally very simplistic. But a model trained to recognize written numbers, for example, likely contains no parameters at all that you could map to ideas a human has, like "look for a rigid line in the number". The parameters may all be, to humans, meaningless.)
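For contrast with a hand-written rule, here is a toy sketch of the learned approach. It is a single perceptron, which is far simpler than anything used on real roads, and the features and training data are invented for illustration. Nobody writes an "if green, go" rule; the program nudges a few numbers until its guesses match the examples.

```javascript
// Toy perceptron: learns "go" vs "stop" from labeled examples instead of rules.
// Features and data are made up: [lightIsGreen, kidInRoad] -> 1 (go) or 0 (stop).
const trainingData = [
  { x: [1, 0], y: 1 }, // green, road clear -> go
  { x: [0, 0], y: 0 }, // red, road clear -> stop
  { x: [1, 1], y: 0 }, // green, kid in road -> stop
  { x: [0, 1], y: 0 }, // red, kid in road -> stop
];

function predict(model, x) {
  // Weighted sum of the inputs plus a bias: this is the "linear algebra" part.
  const sum = x.reduce((acc, xi, i) => acc + model.weights[i] * xi, model.bias);
  return sum > 0 ? 1 : 0;
}

function train(data, epochs = 20) {
  const model = { weights: [0, 0], bias: 0 };
  for (let epoch = 0; epoch < epochs; epoch++) {
    for (const { x, y } of data) {
      const error = y - predict(model, x); // -1, 0, or 1
      // "Turning the crank": nudge each parameter toward the right answer.
      model.weights = model.weights.map((w, i) => w + error * x[i]);
      model.bias += error;
    }
  }
  return model;
}

const trafficModel = train(trainingData);
console.log(predict(trafficModel, [1, 0]) === 1 ? "go" : "stop"); // green, road clear
console.log(predict(trafficModel, [1, 1]) === 1 ? "go" : "stop"); // green, kid in road
console.log(trafficModel); // just numbers: no readable "if green, go" rule inside
```

With two weights you can still squint and interpret them. Scale that up to millions of parameters and you get the plinko machine: it works, but the numbers don't explain themselves.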

So, that's basics. Here are some categories of things which get called AI:

1. "AI" which is just genuinely not AI

There's plenty of software that follows a normal, procedural program defined rigidly, with no linear algebra model training, that companies would love to brand as "AI" because it sounds cool.

Something like motion detection/tracking might be sold as artificially intelligent. But under the covers that can be done as simply as "if some range of pixels changes color by a certain amount, flag as motion".
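As a rough sketch of how little "intelligence" that takes, here is frame differencing on two grayscale frames represented as flat arrays of brightness values (0 to 255). The function name and both thresholds are arbitrary choices for this example, not taken from any real product.

```javascript
// Naive motion detection: compare two frames pixel by pixel, no training involved.
// Frames are flat arrays of grayscale values; the thresholds are arbitrary assumptions.
function hasMotion(prevFrame, currFrame, pixelThreshold = 30, minChangedPixels = 2) {
  if (prevFrame.length !== currFrame.length) {
    throw new Error("frames must be the same size");
  }
  let changed = 0;
  for (let i = 0; i < prevFrame.length; i++) {
    // Count a pixel as "changed" if its brightness moved by more than the threshold.
    if (Math.abs(currFrame[i] - prevFrame[i]) > pixelThreshold) {
      changed++;
    }
  }
  return changed >= minChangedPixels;
}

const emptyRoom = [10, 10, 10, 10];
const sensorNoise = [12, 9, 11, 10];
const someoneWalksIn = [10, 200, 210, 10];

console.log(hasMotion(emptyRoom, sensorNoise));
console.log(hasMotion(emptyRoom, someoneWalksIn));
```

It is a loop and a comparison. Useful, but there is no model and nothing is learned.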

2. AI which IS genuinely AI, but is not the kind of AI everyone is talking about right now

"AI", by which I mean machine learning using linear algebra, is very good at being fed a lot of training data, and then coming up with an ability to go and categorize real information.

The AI that looks at cells and determines whether they're cancerous or not uses this technology. OCR (Optical Character Recognition), which can take an image of hand-written text and transcribe it, does too. Again, it's machine learning built on linear algebra, so yes, it's AI.

Many other such examples exist, and have been around for quite a good number of years. They share the genre of technology, which is machine learning models, but these are not the Large Language Model Generative AI that is all over the media. Criticizing these would be like criticizing airplanes when you're actually mad at military drones. It's the same "makes things fly in the air" technology, but their impact is very different.

3. The AI we ARE talking about: "ChatGPT"-type generative AI, which uses LLMs ("Large Language Models")

If there was one word I wish people would know in all this, it's LLM (Large Language Model). This describes the KIND of machine learning model that ChatGPT is fueled by. These models are so extremely powerfully trained on human language that they can take an input of conversational language and create a predictive output that is coherent to humans. (Art generators like Midjourney and Stable Diffusion are not LLMs themselves. They are image-generation models; Stable Diffusion, for one, is a diffusion model that uses a language-trained text encoder to interpret your prompt. I am less certain of the details there, but AI art generation has risen hand-in-hand with powerful LLMs and belongs to the same generative AI wave.)

This technology isn't exactly brand new. Predictive text has been using language models for years, but it's more like the mostly innocent and much less successful older sibling of some celebrity, who no one really thinks about. What is new with ChatGPT is the scale and power of LLM-based AI technology.
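To give a feel for the "older sibling", here is a toy predictive-text sketch. It is a plain bigram counter (it suggests whichever word most often followed the previous one in its training text), which is NOT how an LLM works internally, but it is the same basic job of predicting the next word. The function names and the sample sentence are made up for this example.

```javascript
// Toy predictive text: count which word follows which, then suggest the most common one.
// This is a simple bigram counter, a stand-in for illustration, not an LLM.
function trainBigrams(text) {
  const counts = new Map(); // word -> Map(nextWord -> how many times it followed)
  const words = text.toLowerCase().split(/\s+/).filter(Boolean);
  for (let i = 0; i < words.length - 1; i++) {
    const followers = counts.get(words[i]) ?? new Map();
    followers.set(words[i + 1], (followers.get(words[i + 1]) ?? 0) + 1);
    counts.set(words[i], followers);
  }
  return counts;
}

function suggestNext(counts, word) {
  const followers = counts.get(word.toLowerCase());
  if (!followers) {
    return null; // never saw this word during training
  }
  let best = null;
  let bestCount = 0;
  for (const [candidate, count] of followers) {
    if (count > bestCount) {
      best = candidate;
      bestCount = count;
    }
  }
  return best;
}

const bigrams = trainBigrams("the light is green the light is red the car can go");
console.log(suggestNext(bigrams, "the"));   // "light" followed "the" twice, "car" once
console.log(suggestNext(bigrams, "light")); // "is" followed "light" both times
console.log(suggestNext(bigrams, "tumblr")); // null: not in the training text
```

An LLM replaces the lookup table with a huge trained model and looks at far more than one previous word, which is where the jump in scale and power comes from.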

So when you want to name it: this is generative AI, or even better, large language model generative AI.

(Data scientists, feel free to add on or correct anything.)

Hi, Tumblr. It’s Tumblr. We’re working on some things that we want to share with you.

AI companies are acquiring content across the internet for a variety of purposes in all sorts of ways. There are currently very few regulations giving individuals control over how their content is used by AI platforms. Proposed regulations around the world, like the European Union’s AI Act, would give individuals more control over whether and how their content is utilized by this emerging technology. We support this right regardless of geographic location, so we’re releasing a toggle to opt out of sharing content from your public blogs with third parties, including AI platforms that use this content for model training. We’re also working with partners to ensure you have as much control as possible regarding what content is used.

Here are the important details:

We already discourage AI crawlers from gathering content from Tumblr and will continue to do so, save for those with which we partner.

We want to represent all of you on Tumblr and ensure that protections are in place for how your content is used. We are committed to making sure our partners respect those decisions.

To opt out of sharing your public blogs’ content with third parties, visit each of your public blogs’ blog settings via the web interface and toggle on the “Prevent third-party sharing” option.

For instructions on how to opt out using the latest version of the app, please visit this Help Center doc.

Please note: If you’ve already chosen to discourage search crawling of your blog in your settings, we’ve automatically enabled the “Prevent third-party sharing” option.

If you have concerns, please read through the Help Center doc linked above and contact us via Support if you still have questions.

The IOF are a bunch of pedophiles

Link to original post on Instagram (X)

Israel is filled with these pricks and they're not only targeting Palestinian children, but Israeli children as well.

For instructions on how to opt out, look at the official staff post on the topic. It also gives more information on Tumblr's new policies. If you are opting out, remember to opt out each separate blog individually.

Please reblog this post, so it will get more votes!