Robotics - Tumblr Posts

Some experimental art and a little poem accompanying it too! (How quaint) Very much based off IHNMAIMS. I had such fun with this one. I hope you enjoy <3

There's many thoughts within that head.

His body, gone.

Shriveled, dead.

Will he ever rekindle a self?

A self he once was rich enough to save.

Will he ever be able to squirm?

Dancing, writhing in freeing pain

To weep and squall

To die and burn

It's a grisly fate, a horrible death

Yet how he can hope and yearn.

All he can do, all he can manage

Is spout numbers and noises

It is a queasy and hopeless marriage.

-Fransteahouse

drew more gabbie, say hello to her cool mech suit

you won’t be saying that if you somehow retain your life and free will after they take over...

This terrifying eel-robot will perform maintenance on undersea equipment

Nope.

🤖✨ Introducing a stark vision of a not-so-distant future where robotic supremacy begins with the smallest of steps. Pictured here is a mechanical marvel—a robotic bird, intricately designed, not just mimicking but replacing its organic counterparts. This robotic bird symbolizes the first phase of robots taking over, strategically reducing the numbers of real birds to ensure their own dominance. It’s a thought-provoking blend of technology and nature, a snapshot of a possible future where artificial life forms could overshadow the natural. Is this the beginning of an era where robots reign supreme?

🌍💡 This piece invites us to ponder the balance between technological advancement and the natural world. It’s not just art; it’s a narrative captured in a single moment, a conversation starter about our technological trajectory and its potential impact on the natural order.

Girl in the Machine

Basically the most technically complex digital image I've ever made. At some point I'd like to blog through the steps I took to make it.

Strange dream

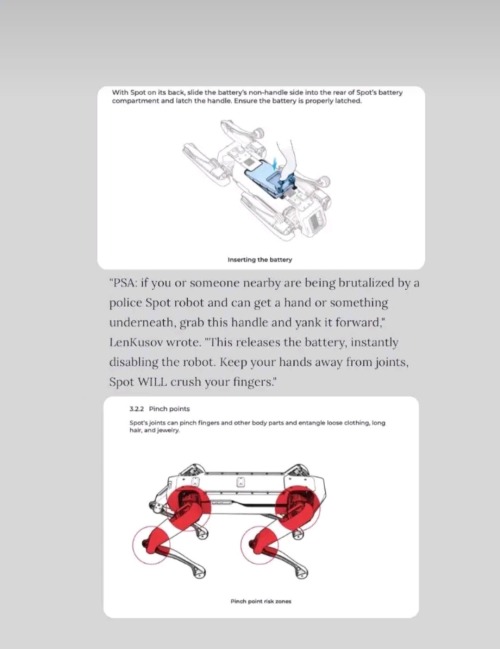

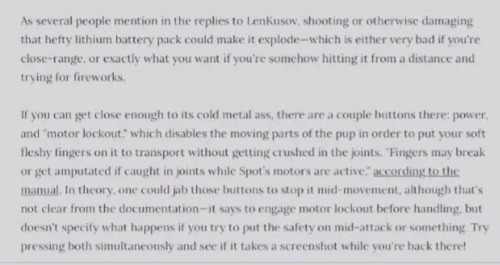

The tools of oppression will advance; your knowledge of how to counter them should also advance.

It works!*

So I (FINALLY) put the final touches on the software for my robot PROTO! (Listen, I am a software person, not a coming-up-with-names person)

Basically, it is an ESP32 running him. He takes HTTP messages: either GET odometry or PUT twist. Both are just a string containing comma-separated numbers.
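Since both messages are just comma-separated numbers, handling them on either end is mostly splitting and joining strings. Here is a minimal sketch of that in Python. The field order (six twist values, three odometry values `x,y,theta`) is my assumption, not PROTO's documented format, and `parse_twist` / `format_odometry` are hypothetical names.

```python
# Hypothetical wire format (assumed, not PROTO's documented one):
#   PUT twist body:    "lin_x,lin_y,lin_z,roll_rate,pitch_rate,yaw_rate"
#   GET odometry body: "x,y,theta"

def parse_twist(body: str) -> tuple[float, ...]:
    """Parse a PUT twist body like '0.2,0,0,0,0,0.5' into six floats."""
    values = tuple(float(part) for part in body.strip().split(","))
    if len(values) != 6:
        raise ValueError(f"expected 6 twist values, got {len(values)}")
    return values


def format_odometry(x: float, y: float, theta: float) -> str:
    """Build a GET odometry response body such as '0.100,0.000,0.000'."""
    return ",".join(f"{v:.3f}" for v in (x, y, theta))
```

Keeping the payload as plain text means you can poke the robot with any HTTP client without a JSON library on the ESP32 side.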

Odometry is the robot's best guess, based on its internal sensors, of where it is (since PROTO uses stepper motors, which rotate in tiny tiny steps... it is basically counting the steps each motor takes).
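Turning counted steps into a position is classic differential-drive dead reckoning. This is a rough sketch only. The motor resolution, wheel radius and track width below are made-up example numbers, not PROTO's, and `update_odometry` is a hypothetical helper.

```python
import math

# Hypothetical robot parameters (assumed example values, not PROTO's real ones).
STEPS_PER_REV = 200 * 16   # 1.8-degree motor at 16x micro-stepping
WHEEL_RADIUS_M = 0.04      # wheel radius in metres
TRACK_WIDTH_M = 0.20       # distance between the two wheels in metres


def steps_to_distance(steps: int) -> float:
    """Convert counted motor steps into metres rolled by that wheel."""
    return steps / STEPS_PER_REV * 2 * math.pi * WHEEL_RADIUS_M


def update_odometry(pose, left_steps, right_steps):
    """Dead-reckon a new (x, y, theta) pose from steps counted since the last update."""
    x, y, theta = pose
    d_left = steps_to_distance(left_steps)
    d_right = steps_to_distance(right_steps)
    d_center = (d_left + d_right) / 2              # distance moved by the robot's centre
    d_theta = (d_right - d_left) / TRACK_WIDTH_M   # change in heading (radians)
    # Use the mid-point heading for a slightly better approximation of the arc.
    x += d_center * math.cos(theta + d_theta / 2)
    y += d_center * math.sin(theta + d_theta / 2)
    return (x, y, theta + d_theta)
```

Because it only counts steps, any slipping or skipped steps go unnoticed, which is why odometry is a *best guess*.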

Twist is speed, both in the x, y and z directions, and speed in the angular directions (pitch, roll and yaw). This is used to tell the robot how to move.

Now, since PROTO is a robot on two wheels, with a third free-running ball ahead of him, he cannot slide to the side or go straight up in the air. You can TRY telling him to do that, but he will not understand what you mean. Same with angular movement: PROTO can turn left or right, but he has no clue what you mean if you tell him to bend forward or roll over.
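In other words, out of the six twist numbers only two matter for a robot like this: forward speed and yaw rate. A small sketch of throwing the rest away (and reporting what was ignored), assuming the six values arrive in the order linear x/y/z then roll/pitch/yaw. `supported_motion` is a hypothetical name.

```python
def supported_motion(twist):
    """Keep only the parts of a 6-value twist a two-wheeled robot can perform.

    Assumed order: (lin_x, lin_y, lin_z, roll_rate, pitch_rate, yaw_rate).
    Returns (forward speed, yaw rate, names of non-zero components that were ignored).
    """
    lin_x, lin_y, lin_z, roll, pitch, yaw = twist
    ignored = {"lin_y": lin_y, "lin_z": lin_z, "roll": roll, "pitch": pitch}
    unsupported = [name for name, value in ignored.items() if value != 0]
    return lin_x, yaw, unsupported
```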

The software is layered (I plan the layers with a BDD diagram. I love diagrams!)

Basically PROTO gets a twist command and hands that over to the Differential_Movement_Model layer.

The Differential_Movement_Model layer translates that into linear velocity (how fast to move forward and backwards) and angular velocity (how fast to turn left or right), combines them, and orders each wheel to move so and so fast via the Stepper_Motors layer.
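Combining the two speeds is the standard differential-drive formula: each wheel gets the forward speed, plus or minus the turn rate times half the distance between the wheels. A minimal sketch, with a made-up track width and a hypothetical `wheel_speeds` function:

```python
TRACK_WIDTH_M = 0.20  # hypothetical distance between the wheels (assumed)


def wheel_speeds(linear_mps: float, angular_radps: float) -> tuple[float, float]:
    """Differential-drive mix: returns (left, right) wheel speeds in m/s.

    A positive angular speed (counter-clockwise seen from above) makes the
    right wheel run faster than the left one, so the robot turns left.
    """
    half_track = TRACK_WIDTH_M / 2
    left = linear_mps - angular_radps * half_track
    right = linear_mps + angular_radps * half_track
    return left, right
```

With zero angular speed both wheels get the same speed; with zero linear speed they spin in opposite directions and the robot turns on the spot.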

The Stepper_Motors layer turns the wanted speed into how many steps each stepper motor has to do per second, and makes sure that the motors can actually achieve that speed. It also makes sure that the wheels turn the right way, no matter how they are mounted (in PROTO's case, if both wheels turn clockwise, the right wheel goes forward and the left one backwards). It then sends this steps-per-second request down to the Peripheral_Hub layer.
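The speed-to-steps conversion is just "how many wheel turns per second, times steps per turn", then a clamp and a sign flip for the mirrored wheel. A sketch with invented limits (the step resolution, wheel radius and max step rate are assumptions, and `to_steps_per_second` is a hypothetical name):

```python
import math

STEPS_PER_REV = 200 * 16   # hypothetical: 1.8-degree motor, 16x micro-stepping
WHEEL_RADIUS_M = 0.04      # hypothetical wheel radius in metres
MAX_STEPS_PER_S = 3200     # hypothetical highest step rate the motor can follow


def to_steps_per_second(speed_mps: float, mirrored: bool) -> int:
    """Convert a wheel's forward speed into a signed step rate.

    `mirrored` flips the sign for a wheel mounted facing the other way, so
    "forward" means forward on both sides of the robot.
    """
    wheel_circumference = 2 * math.pi * WHEEL_RADIUS_M
    rate = speed_mps / wheel_circumference * STEPS_PER_REV
    # Clamp to what the motor can actually do.
    rate = max(-MAX_STEPS_PER_S, min(MAX_STEPS_PER_S, rate))
    if mirrored:
        rate = -rate
    return round(rate)
```

For a robot wired like PROTO, the left wheel would be the one called with `mirrored=True`.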

The Peripheral_Hub layer is just a hub... as the name implies, it calls the needed driver functions to turn pins on/off, have timers count steps, and run a PWM (pulse-width modulation: it sends pulses of a particular width at a specific frequency) signal to the driver boards.
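One way to think about the PWM part: a step/direction driver board takes one step per pulse, so if each PWM period is one pulse, the PWM frequency is simply the step rate, and the sign goes to the direction pin. A hypothetical sketch of that mapping (the 50 % duty cycle is just an assumed choice, and this is not how PROTO's Peripheral_Hub is necessarily written):

```python
def step_pwm_settings(steps_per_second: int) -> dict:
    """Hypothetical mapping from a signed step rate to STEP/DIR pin settings.

    One PWM period = one step pulse, so the PWM frequency equals the step rate.
    A 50 % duty cycle is assumed here for simplicity.
    """
    if steps_per_second == 0:
        # No movement requested: stop the pulse train entirely.
        return {"enabled": False, "direction": 0, "frequency_hz": 0, "duty": 0.0}
    return {
        "enabled": True,
        "direction": 1 if steps_per_second > 0 else 0,  # logic level for the DIR pin
        "frequency_hz": abs(steps_per_second),
        "duty": 0.5,
    }
```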

Layering it also means it is a lot easier to test a layer. Basically, if I want to test, I change 1 variable in the build files and a mock layer is built underneath whatever layer I want to test.

So if I want to test the Stepper_Motors layer, I use a mock Peripheral_Hub layer, so if there are errors in the real Peripheral_Hub layer, they do not show up when I am testing the Stepper_Motors layer.
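The mock trick works because the layer above only knows the *interface* of the layer below. On the ESP32 the swap happens in the build files; this Python sketch fakes it with a constructor argument instead. All class and method names here are hypothetical stand-ins for the real layers.

```python
class PeripheralHub:
    """Real layer: would talk to pins and timers on the ESP32 (stubbed here)."""

    def set_step_rate(self, motor: str, steps_per_second: int) -> None:
        raise NotImplementedError("hardware access is not available in this sketch")


class MockPeripheralHub(PeripheralHub):
    """Mock layer: records requests instead of touching hardware."""

    def __init__(self) -> None:
        self.calls: list[tuple[str, int]] = []

    def set_step_rate(self, motor: str, steps_per_second: int) -> None:
        self.calls.append((motor, steps_per_second))


class StepperMotors:
    """Layer under test: it only knows the hub interface, not which hub it got."""

    def __init__(self, hub: PeripheralHub) -> None:
        self.hub = hub

    def drive(self, left_rate: int, right_rate: int) -> None:
        # The left wheel is mounted mirrored, so its direction is flipped.
        self.hub.set_step_rate("left", -left_rate)
        self.hub.set_step_rate("right", right_rate)


def build_stack(use_mock: bool) -> tuple[StepperMotors, PeripheralHub]:
    """Stand-in for flipping the 'use mock' variable in the build files."""
    hub = MockPeripheralHub() if use_mock else PeripheralHub()
    return StepperMotors(hub), hub
```

A test then calls `drive()` and checks `hub.calls`, so a bug in the real hardware layer can never make the Stepper_Motors test fail.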

The HTTP server part is basically a standard ESP32 example server, where I have removed all the HTTP call handlers, and made my own 2 instead. Done done.

So since the software works... of course I am immediately having hardware problems. The stepper motors are not NEARLY as strong as they need to be... have to figure something out... maybe they are not getting the power they need... or I need smaller wheels... or I will have to buy a gearbox to make them slower but stronger... in which case I should probably also fix the freaking cannot-change-the-micro-stepping problem with the driver boards, since otherwise PROTO will go from a max speed of 0.3 meters per second to most likely 0.06 meters per second, which... is... a bit slow...
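The speed numbers come from simple arithmetic: top speed is capped by how many step pulses per second the setup can manage. A gearbox divides wheel speed by its ratio (0.3 to 0.06 m/s works out to a 5:1 reduction), while fewer micro-steps per full step multiplies it back. A sketch with purely illustrative inputs (`max_speed_mps` is a hypothetical helper):

```python
import math


def max_speed_mps(max_step_rate: float, full_steps_per_rev: int, microsteps: int,
                  wheel_radius_m: float, gear_ratio: float = 1.0) -> float:
    """Top wheel speed when only `max_step_rate` step pulses per second are possible."""
    motor_revs_per_s = max_step_rate / (full_steps_per_rev * microsteps)
    wheel_revs_per_s = motor_revs_per_s / gear_ratio  # a reduction gearbox slows the wheel
    return wheel_revs_per_s * 2 * math.pi * wheel_radius_m
```

So with a 5:1 gearbox and the same step rate, halving the micro-stepping doubles the top speed again, which is why the two fixes go together.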

But software works! And PROTO can happily move his wheels and pretend he is driving somewhere when on his maintenance stand (Yes, it LOOKS like 2 empty cardboard boxes, but I am telling you it is a maintenance stand... since it sounds a lot better :p)

I have gone over everything really quickly in this post... if someone wants me to cover a part of PROTO, just comment which one, and I will most likely do it (I have lost all sense of which parts of this project are interesting to people who are not doing the project)

What's your favourite fact?

Don't really have many favorite things.

But I will tell you the best one I can think of right now:

"Human eyeballs are all the exact same size. "

Now. In fact, they grow a little bit for a little while after birth. And "exactly the same size" means "within 1-2 mm", but I think it counts.

Why do I know that fact? Because I make robots. And robots can get the distance to something on the camera IF they know the true size of an object next to the target.

And eyes are quite easy to spot with programming. So robots use all the human eyeballs to constantly calibrate their distance measurements.
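The trick behind this is the pinhole camera model: an object's size in pixels is the focal length (in pixels) times its real size, divided by its distance. If you know the real size, you can solve for distance, or use a known distance to calibrate the focal length. A minimal sketch; the function names are hypothetical and the 24 mm eye size in the example is an assumed round number.

```python
def distance_from_known_size(focal_length_px: float, real_size_m: float,
                             size_in_image_px: float) -> float:
    """Pinhole-camera estimate: distance = focal length * real size / apparent size."""
    if size_in_image_px <= 0:
        raise ValueError("object must be visible (positive pixel size)")
    return focal_length_px * real_size_m / size_in_image_px


def focal_length_from_reference(size_in_image_px: float, distance_m: float,
                                real_size_m: float) -> float:
    """Calibrate the focal length (in pixels) from an object at a known distance."""
    return size_in_image_px * distance_m / real_size_m
```

For example, with an 800 px focal length, an eye assumed to be 24 mm across that shows up 16 px wide would be about 1.2 m away.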

Now THAT is a neat fact!

I made a boy!!

Just take my money dammit!

Video: MorpHex MKI

this is what happens when you go too deep into the heart of twitter:

my reaction:

also bonus:

Robotboy model 1 to model 9

Protoboy model 1 to model 2